Enhancing LLM Reasoning with Advanced Policy Optimization: The Power of GRPO

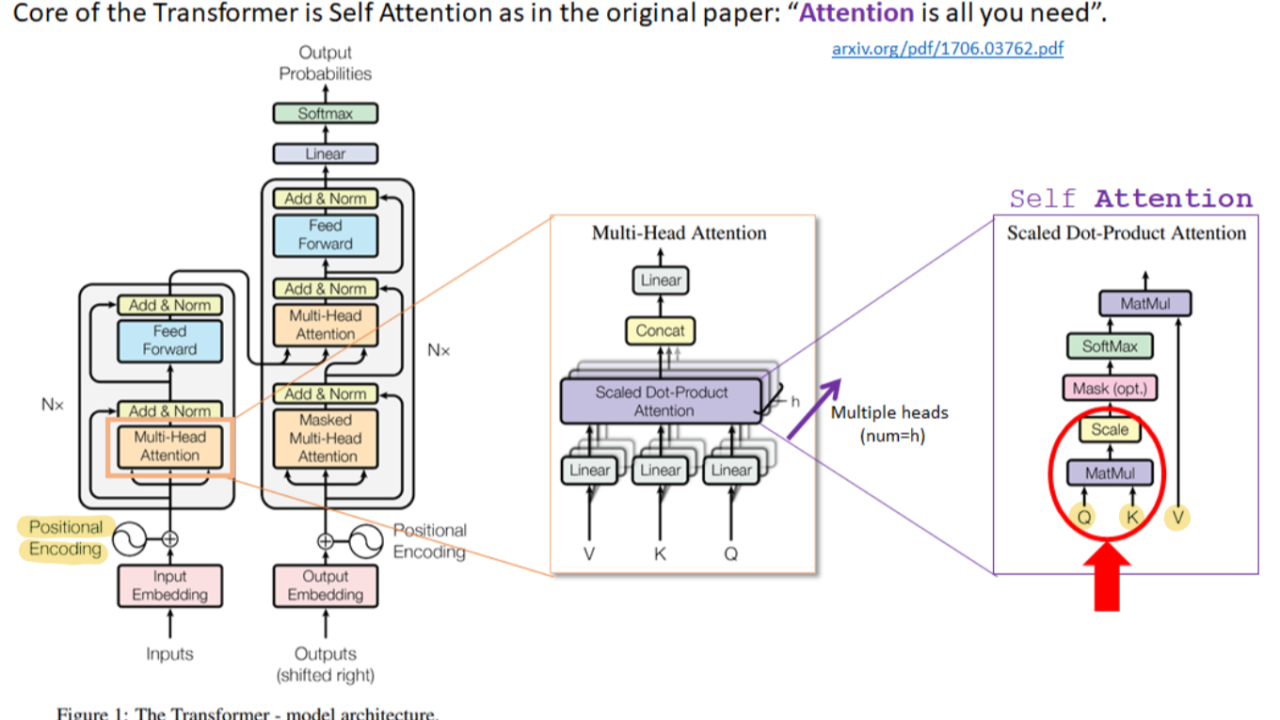

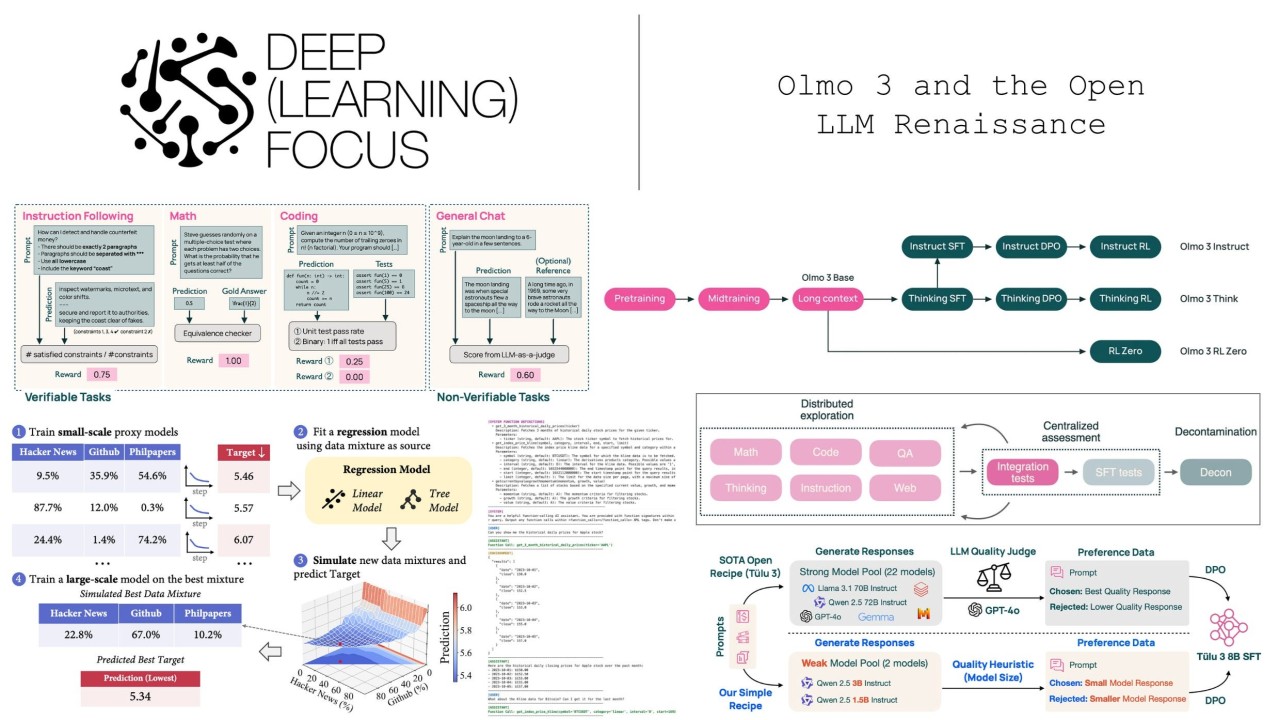

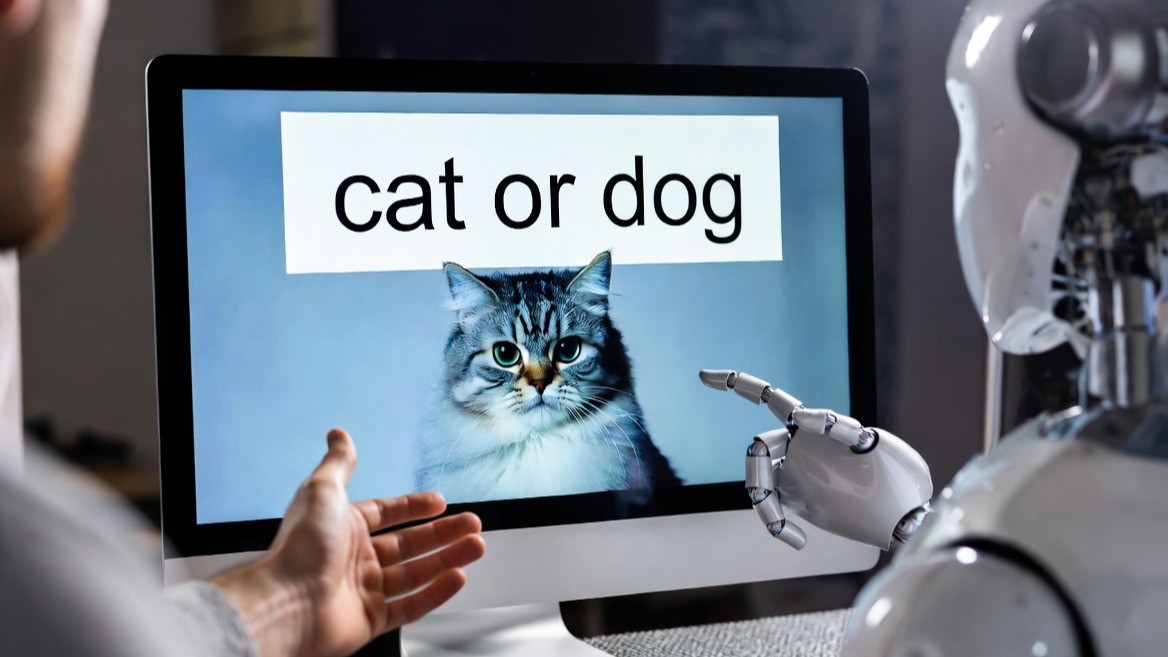

In the world of artificial intelligence, large language models (LLMs) are increasingly relied upon for complex reasoning tasks, from solving math problems to analyzing code. But getting these models to